AI and Quantum Computing Risks

Government Integration, Existential Threats, and Societal Transformation

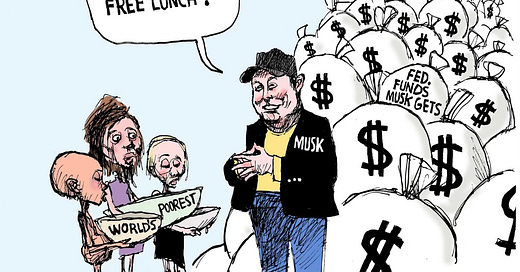

Elon Musk’s AI Integration into Government Systems: Privacy and Civil Liberty Risks

Elon Musk’s Department of Government Efficiency (DOGE) has rapidly expanded the use of his proprietary AI tools, including the Grok chatbot, across federal agencies. Internal documents and whistleblower accounts indicate that DOGE staff have accessed highly sensitive federal databases containing medical records, financial data, and other personal information on millions of Americans191417. This unprecedented access raises serious privacy concerns: Grok, developed by Musk’s xAI, could analyze or retain confidential data, potentially violating the Privacy Act of 1974 and exposing individuals to exploitation19.

Key risks include:

Conflict of Interest: Musk’s dual role as head of major federal contractors (SpaceX, Tesla, and Starlink) and as a government advisor creates opportunities for corporate espionage or preferential treatment in federal contracts114.

Lack of Oversight: DOGE operates with minimal transparency, reportedly using encrypted platforms such as Signal to evade public records laws and bypassing standard vetting processes for data access917.

Surveillance Overreach: DOGE has allegedly deployed AI to monitor federal employees’ communications for “anti-Trump sentiment,” blurring the line between governance and political targeting17.

Cybersecurity experts warn that quantum computing (QC) could compound these risks. A sufficiently powerful quantum computer might eventually break widely used public-key encryption, leaving sensitive government data vulnerable to adversaries who harvest it now and decrypt it later12.

Existential Threats: Scientific Consensus on AI and QC

A growing consensus among AI researchers warns of catastrophic risks from advanced AI and QC:

Extinction-Level Scenarios: A 2024 survey of 2,778 AI researchers found a median estimate of 5% that AI could cause human extinction, with 10% of respondents assigning a probability of at least 25%1618. A 2024 report commissioned by the U.S. State Department echoed these concerns, warning that AI could pose an “extinction-level threat” through unaligned superintelligence or weaponized applications311.

Quantum Computing Risks: A sufficiently powerful quantum computer could break the encryption that protects global financial systems, healthcare data, and military communications. One estimate puts the damage from a single quantum attack on U.S. banking infrastructure at $2 trillion12. Post-quantum cryptography efforts are underway, including NIST’s first post-quantum standards finalized in 2024, but adoption lags behind QC advancements412.

Employment Disruption: Immediate and Severe Impacts

AI-driven automation is projected to reshape labor markets dramatically by 2030:

Job Losses: Goldman Sachs estimates that generative AI could expose the equivalent of 300 million full-time jobs globally to automation, and 23.5% of U.S. companies have reportedly already replaced workers with ChatGPT67. McKinsey projects that 12 million Americans may need to switch occupations by 2030, particularly in administrative, customer service, and manufacturing roles513.

Worst-Case Scenarios: Middle-income jobs face a “hollowing out,” with up to 30% of hours worked across the U.S. economy potentially automatable by 2030. Without retraining programs, structural unemployment could destabilize economies613.

AI in Warfare and Legal Accountability

AI is transforming military operations and international law:

Battlefield Applications: AI systems are now used to collect real-time battlefield data for war crimes prosecutions, but machine-learning algorithms struggle with contextual nuance, risking flawed evidence in tribunals8.

Autonomous Weapons: The integration of AI into defense systems raises ethical dilemmas, as autonomous drones and cyberweapons could escalate conflicts beyond human control819.

Global Competition for AI and QC Dominance

Nations recognize that leadership in AI and QC is existential:

U.S. vs. China: The U.S. faces pressure to outpace China in post-quantum cryptography and AI governance. National Security Memorandum 10 (2022) sets a goal of migrating federal systems to quantum-resistant cryptography, mitigating as much quantum risk as feasible by 203512.

Corporate Power: Firms like Google (Willow quantum chip) and Microsoft (Majorana 1) are racing to dominate QC infrastructure, which could determine future economic and military superiority12.

Scientific Community’s Call to Action

Researchers emphasize urgent measures to mitigate risks:

Regulation: The 2023 U.S. AI Executive Order (since rescinded) and the EU AI Act aimed to balance innovation with safeguards, but DOGE has dismantled federal AI oversight frameworks, prioritizing speed over safety11019.

Ethical AI Development: Experts advocate for “differential privacy” protocols and bans on autonomous weapons, though political will remains lacking318.

Conclusion

The confluence of DOGE’s unchecked government AI integration, existential technological risks, and rapid workforce automation presents a pivotal challenge for democracy and global stability. Without transparent governance, robust encryption standards, and international cooperation, AI and QC could erode civil liberties, escalate military conflicts, and destabilize societies. The scientific community’s warnings underscore the need for immediate action to align technological progress with human survival.

Further Reading:

The Silent Coup: How the Broligarchy Weaponizes Data, AI, and Tribalism to Rewire Democracy

The digital age has birthed a new aristocracy: the broligarchy—tech titans like Elon Musk, Mark Zuckerberg, and Peter Thiel—who wield algorithmic might, surveillance capitalism, and political patronage to reshape governance. Their tools? AI-driven disinformation, data harvesting, and legal intimidation. Their endgame? A post-democratic order where corpo…

https://www.cnn.com/2024/03/12/business/artificial-intelligence-ai-report-extinction

https://www.npr.org/2025/03/11/nx-s1-5305054/doge-elon-musk-security-data-information-privacy

https://www.axios.com/2025/02/05/musk-doge-ai-government-efficiency-safeguards

https://en.wikipedia.org/wiki/Existential_risk_from_artificial_intelligence

https://www.businessinsider.com/ai-disruption-job-market-covid-mckinsey-2024-6

https://www.the-independent.com/news/world/americas/us-politics/elon-musk-doge-grok-ai-b2756947.html

https://www.wired.com/story/elon-musk-lieutenant-gsa-ai-agency/

https://www.rand.org/pubs/commentary/2025/05/could-ai-really-kill-off-humans.html

https://techpolicy.press/musk-ai-and-the-weaponization-of-administrative-error

https://civilrights.org/2025/03/20/doge-government-data-privacy/

https://aiimpacts.org/wp-content/uploads/2023/04/Thousands_of_AI_authors_on_the_future_of_AI.pdf

https://i-estimate.com/wp-content/uploads/2024/06/Quantum-Computing-and-Existential-Threats-2.pdf

https://www.mckinsey.com/mgi/our-research/generative-ai-and-the-future-of-work-in-america

https://www.forbes.com/sites/bernardmarr/2024/09/17/how-ai-is-used-in-war-today/

https://www.lawfaremedia.org/article/how-will-artificial-intelligence-impact-battlefield-operations

https://lieber.westpoint.edu/emerging-technologies-collection-battlefield-evidence/

https://www.cnas.org/press/press-release/cnas-launches-new-initiative-on-ai-in-future-warfare
